TimescaleDB vs. QuestDB: Performance benchmarks and overview

Choosing the right database is a careful exercise. We feel the best way to help you cut through the marketing noise is to provide the most rigorous benchmarks available, and then to put the results in context.

TimescaleDB and QuestDB are two popular open-source time-series databases. TimescaleDB positions itself as an enhanced PostgreSQL for faster analytics, while QuestDB is purpose-built for raw performance, low latency, and high throughput.

This article provides a detailed performance comparison of TimescaleDB and QuestDB, followed by an overview of their architectures and limitations.

Introducing TimescaleDB & QuestDB

Both Timescale & QuestDB aim to help developers scale time series workloads.

According to DB-Engines, the two compare as follows:

| Feature | QuestDB | TimescaleDB |
|---|---|---|
| Primary database model | Time Series DBMS | Time Series DBMS |
| Implementation language | Java (zero-GC), C++, Rust | C |
| SQL? | Yes | Yes |
| APIs | ILP, HTTP, PGWire, JDBC | JDBC, ODBC, C lib |

TimescaleDB is a time series database built atop PostgreSQL. It's provided under two licenses: Apache 2.0 and the proprietary Timescale License. It's an extension of PostgreSQL, rather than a standalone database. For many in the PostgreSQL ecosystem, it provides a familiar entry point into time series workloads while retaining full PostgreSQL compatibility.


On the other side, QuestDB is an open-source time series database licensed under Apache License 2.0. It's built from the ground up for maximum performance and ease of use, and has been hardened against the most demanding high-throughput, high-cardinality ingestion workloads. It's also built atop open formats such as Parquet to avoid vendor lock-in. QuestDB offers very fast querying through SQL with time series extensions.

Performance benchmarks

Before we get into internals, let's compare how QuestDB and TimescaleDB handle both ingestion and querying. As usual, we use the industry-standard Time Series Benchmark Suite (TSBS) as the benchmark tool.

Ingestion benchmark results

Starting with ingestion, how do the two compare?

The hardware used is the following:

- AWS EC2 r8a.8xlarge instance with 32 vCPU and 256 GB RAM (AMD EPYC)
- GP3 EBS storage configured for 20,000 IOPS and 1 GB/s throughput

And on the software side:

- Ubuntu 22.04
- TimescaleDB 2.23.1 on PostgreSQL 17.6
- QuestDB 9.2.2 with the out-of-the-box configuration

The benchmark compares ingestion speed between QuestDB and TimescaleDB using the TSBS cpu-only use case. Data is sent via 32 concurrent connections. We test five scale factors, from 100 to 1,000,000 hosts, representing increasing cardinality levels:

↑ Higher is better

| Scale (hosts) | Rows | QuestDB (rows/sec) | TimescaleDB (rows/sec) | QuestDB Advantage |
|---|---|---|---|---|
| 100 | 1,728,000 | 4,023,402 | 313,113 | 12.8x faster |
| 1,000 | 17,280,000 | 7,483,735 | 1,244,709 | 6.0x faster |
| 4,000 | 69,120,000 | 9,532,172 | 1,177,506 | 8.1x faster |
| 100,000 | 172,800,000 | 11,360,000 | 1,034,797 | 11.0x faster |
| 1,000,000 | 576,000,000 | 7,330,000 | 620,267 | 11.8x faster |
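The advantage column is simply the ratio of the two throughput figures in each row. The short Python check below recomputes it using only the numbers from the table; note that the 100-host ratio works out to about 12.85, which rounds to 12.8x at one decimal place.

```python
# Throughput figures copied from the ingestion table (rows/sec).
INGESTION = {
    # hosts: (questdb_rows_per_sec, timescaledb_rows_per_sec)
    100: (4_023_402, 313_113),
    1_000: (7_483_735, 1_244_709),
    4_000: (9_532_172, 1_177_506),
    100_000: (11_360_000, 1_034_797),
    1_000_000: (7_330_000, 620_267),
}


def advantage(questdb: float, timescaledb: float) -> float:
    """Ratio of QuestDB to TimescaleDB throughput, rounded to one decimal."""
    return round(questdb / timescaledb, 1)


if __name__ == "__main__":
    for hosts, (qdb, tsdb) in INGESTION.items():
        print(f"{hosts:>9,} hosts: {advantage(qdb, tsdb)}x")
```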

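QuestDB starts at 4 million rows per second at 100 hosts and climbs to 11.4 million rows per second at 100K hosts, still sustaining 7.3 million rows per second at 1M hosts. In other words, its throughput rises with cardinality up to 100K hosts and remains far above TimescaleDB's even at the highest tested level.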

On the other hand, TimescaleDB's performance peaks at around 1.2 million rows per second for 1,000 hosts, then degrades at higher cardinality—dropping to 620K rows/sec at 1M hosts.

In most time series benchmarks, high-cardinality data is where performance profiles really start to diverge. Across all tested cardinality levels, QuestDB's ingestion rate is 6x to 13x higher than TimescaleDB's.

It should be noted that TimescaleDB's performance characteristics are influenced by PostgreSQL's row-based architecture, which presents challenges when scaling time-series data workloads.

Query benchmark results

As part of the standard TSBS benchmark, we test several types of popular time series queries. These include single-groupby queries (aggregating metrics for specific hosts over time ranges), double-groupby queries (aggregating across all hosts), and heavy analytical queries.

All query benchmarks run with a single worker against the 4,000-host dataset (69 million rows).

Single-Groupby Queries

These queries aggregate CPU metrics for random hosts over specified time ranges.

↓ Lower is better

Query format: metrics-hosts-hours

| Query | QuestDB (mean) | TimescaleDB (mean) | QuestDB Advantage |
|---|---|---|---|
| 1 metric, 1 host, 1h | 1.07 ms | 1.07 ms | Similar |
| 1 metric, 1 host, 12h | 1.73 ms | 5.49 ms | 3.2x faster |
| 1 metric, 8 hosts, 1h | 1.47 ms | 3.62 ms | 2.5x faster |
| 5 metrics, 1 host, 1h | 1.01 ms | 1.15 ms | Similar |
| 5 metrics, 1 host, 12h | 1.84 ms | 5.58 ms | 3.0x faster |
| 5 metrics, 8 hosts, 1h | 1.47 ms | 3.67 ms | 2.5x faster |

For the simplest queries (one host over one hour), both databases perform similarly. As the queries widen (longer time ranges, more hosts), QuestDB's advantage becomes clear, with 2.5x to 3.2x lower latency.
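To make the workload concrete, here is a minimal Python sketch of a single-groupby query over in-memory rows. It assumes the shape we understand TSBS to use (MAX of one CPU metric per 5-minute bucket for a few hosts over a time window); the row dictionaries are hypothetical, the `usage_user` field follows TSBS's cpu table, and neither database executes the query this way.

```python
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)
BUCKET = timedelta(minutes=5)  # assumed TSBS single-groupby bucket size


def floor_to_bucket(ts: datetime, bucket: timedelta = BUCKET) -> datetime:
    """Round a UTC timestamp down to the start of its epoch-aligned bucket."""
    return ts - (ts - EPOCH) % bucket


def single_groupby(rows, metric, hosts, start, end):
    """MAX of one metric per 5-minute bucket for the given hosts in [start, end)."""
    maxima = {}
    for row in rows:
        # The host and time predicates narrow the scan to a small slice of data.
        if row["hostname"] in hosts and start <= row["ts"] < end:
            bucket = floor_to_bucket(row["ts"])
            maxima[bucket] = max(maxima.get(bucket, row[metric]), row[metric])
    return dict(sorted(maxima.items()))
```

Widening the window from 1 to 12 hours, or the host set from 1 to 8, multiplies the rows that pass the predicates, which is where the latency gap in the table opens up.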

Double-Groupby Queries

These queries aggregate across ALL 4,000 hosts, grouped by host and 1-hour intervals, a common analytical workload.

↓ Lower is better


| Query | QuestDB (mean) | TimescaleDB (mean) | QuestDB Advantage |
|---|---|---|---|
| 1 metric, all hosts | 33.84 ms | 547 ms | 16x faster |
| 5 metrics, all hosts | 43.59 ms | 789 ms | 18x faster |
| 10 metrics, all hosts | 56.80 ms | 1,135 ms | 20x faster |

For double-groupby queries, QuestDB is 16x to 20x faster than TimescaleDB, showcasing its strength in analytical workloads with multiple aggregations.
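The double-groupby shape differs in that there is no host filter: every row in the time window contributes to one (hour, host) group. A minimal sketch, assuming an AVG of one metric per host per hour as we understand TSBS's double-groupby queries, over hypothetical in-memory rows:

```python
from datetime import datetime, timedelta, timezone


def double_groupby(rows, metric, start, end):
    """AVG of one metric per (hour, host) across all hosts in [start, end)."""
    sums, counts = {}, {}
    for row in rows:
        if start <= row["ts"] < end:  # no host predicate: every host is aggregated
            hour = row["ts"].replace(minute=0, second=0, microsecond=0)
            key = (hour, row["hostname"])
            sums[key] = sums.get(key, 0.0) + row[metric]
            counts[key] = counts.get(key, 0) + 1
    return {key: sums[key] / counts[key] for key in sorted(sums)}


if __name__ == "__main__":
    t0 = datetime(2024, 1, 1, tzinfo=timezone.utc)
    rows = [
        {"hostname": "host_0", "ts": t0 + timedelta(minutes=m), "usage_user": float(m)}
        for m in (0, 30, 90)
    ]
    print(double_groupby(rows, "usage_user", t0, t0 + timedelta(hours=12)))
```

With thousands of hosts, the number of groups and rows touched grows with cardinality, which is the kind of work a columnar, vectorized engine is built for.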

Heavy Analytical Queries

The high-cpu-all query performs a full table scan, finding hosts with CPU utilization above a threshold.

↓ Lower is better

| Query | QuestDB (mean) | TimescaleDB (mean) | QuestDB Advantage |
|---|---|---|---|
| high-cpu-all | 979 ms | 1,082 ms | 1.1x faster |

For heavy analytical queries scanning large portions of the dataset, both databases perform comparably, with QuestDB maintaining a slight edge.
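The high-cpu-all query can be sketched as a plain filter. The 90% `usage_user` threshold below is our assumption about the TSBS query, and the integer timestamps are illustrative; the point is that, without a host predicate, every row in the time window has to be checked.

```python
def high_cpu_all(rows, start, end, threshold=90.0):
    """Return every reading in [start, end) whose usage_user exceeds threshold.

    There is no host predicate, so every row in the window must be inspected:
    the access pattern of a full scan over the time range.
    """
    return [
        row
        for row in rows
        if start <= row["ts"] < end and row["usage_user"] > threshold
    ]
```

Because the work is dominated by reading and comparing every value, both engines end up bound by similar scan costs, which is consistent with the narrow gap in the table.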

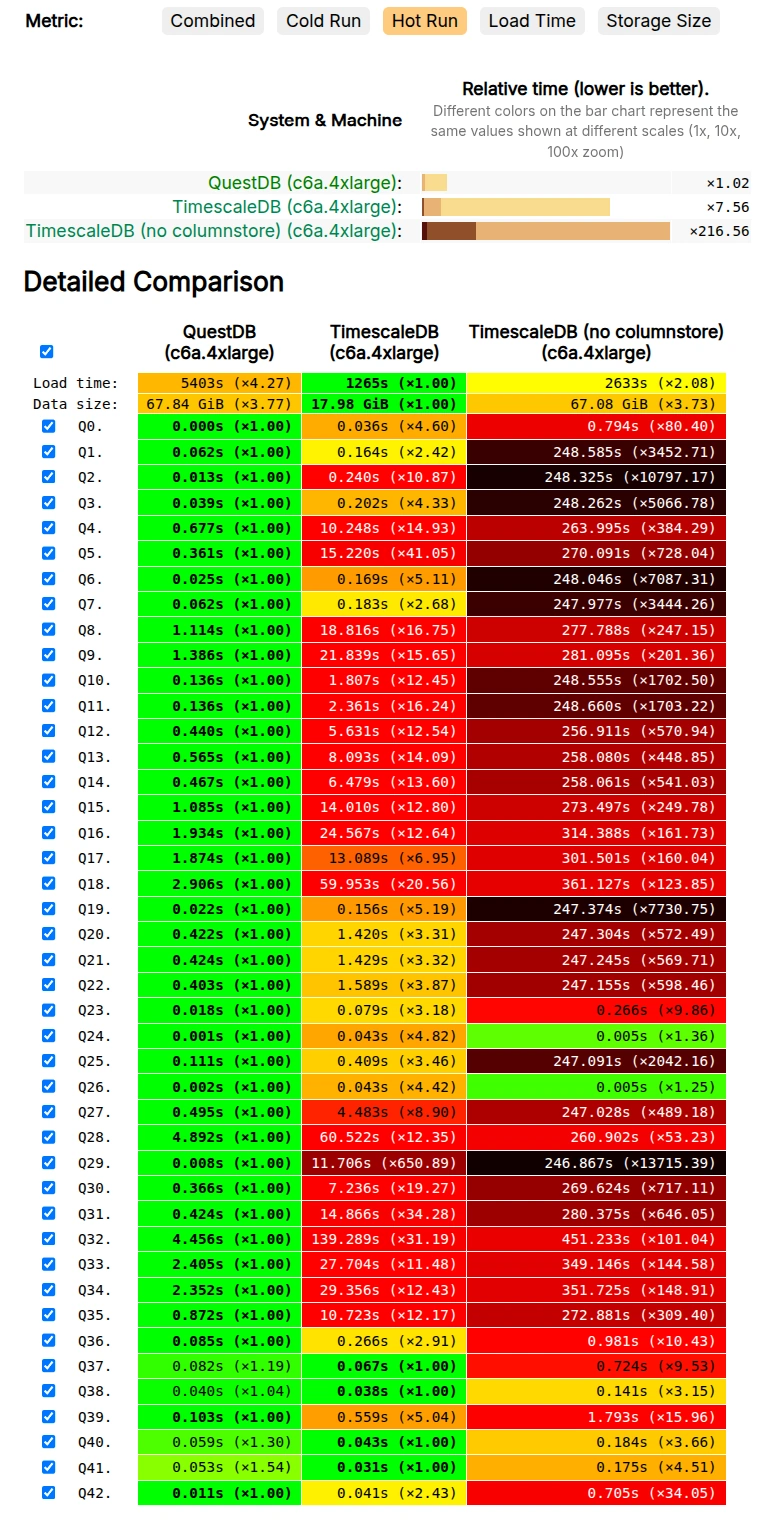

"OLAP" query benchmarks

Although time series databases are optimized for time-based queries, established Online Analytical Processing (OLAP) benchmarks cast a wider net. One well-known example is ClickBench.

Across most ClickBench queries, QuestDB outperforms TimescaleDB by factors ranging from 10x to 650x on the same AWS EC2 instance type (c6a.4xlarge).

Architecture overview

The results tell a story.

But what's under the hood that leads to these outcomes?

TimescaleDB overview

TimescaleDB extends PostgreSQL's row-based architecture with a hybrid row-columnar storage engine called Hypercore. Recent data is stored in row format for fast inserts, while older data is automatically converted to columnar format with compression. This contrasts with the purely columnar architectures of DuckDB, InfluxDB 3.0, and QuestDB, which store all data in columnar format from the start.
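As a rough illustration of why converting rows to columns matters, the sketch below pivots row tuples into per-column lists and run-length encodes a repetitive column. This is a generic illustration under our own assumptions, not Hypercore's actual on-disk format or compression scheme.

```python
from typing import Any


def rows_to_columns(rows: list[tuple], names: list[str]) -> dict[str, list[Any]]:
    """Pivot row-oriented tuples into one list per column."""
    columns: dict[str, list[Any]] = {name: [] for name in names}
    for row in rows:
        for name, value in zip(names, row):
            columns[name].append(value)
    return columns


def run_length_encode(values: list[Any]) -> list[tuple[Any, int]]:
    """Collapse runs of equal adjacent values into (value, run_length) pairs."""
    runs: list[list[Any]] = []
    for value in values:
        if runs and runs[-1][0] == value:
            runs[-1][1] += 1
        else:
            runs.append([value, 1])
    return [(value, length) for value, length in runs]


if __name__ == "__main__":
    rows = [(0, "host_0", 12.5), (10, "host_0", 13.0), (20, "host_0", 12.0)]
    cols = rows_to_columns(rows, ["ts", "hostname", "usage_user"])
    # An aggregate over usage_user only needs to read this one list.
    print(sum(cols["usage_user"]) / len(cols["usage_user"]))
    # A repetitive column compresses to a single run.
    print(run_length_encode(cols["hostname"]))
```

In a row store the same aggregate would walk every full row; the trade-off is that appending one row to a columnar layout touches every column, which is why Hypercore keeps recent data in row form first.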

It inherits PostgreSQL's SQL capabilities, including joins and secondary and partial indexes, and integrates with other extensions like PostGIS, while improving PostgreSQL's performance for time series data. However, Timescale's own performance comparisons are made against PostgreSQL rather than against specialized time series databases. As such, TimescaleDB's PostgreSQL compatibility is a double-edged sword.

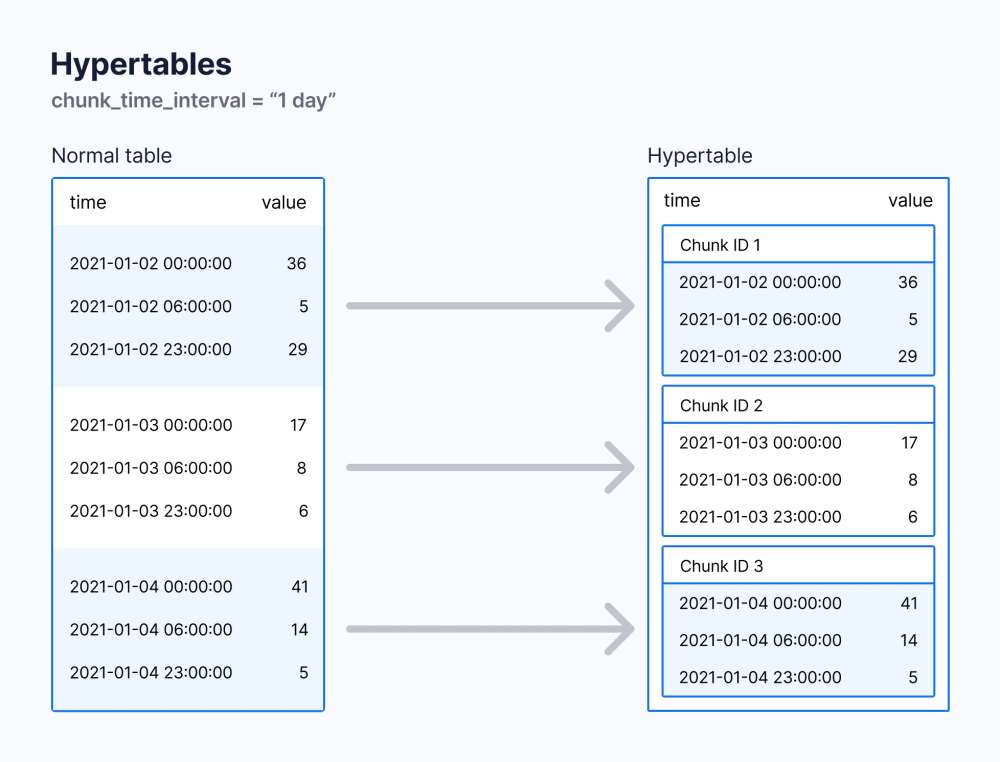

TimescaleDB uses "hypertables" to manage large datasets, automatically partitioning them into "chunks" by time interval and, optionally, by space (a second partitioning column). These chunks are essentially smaller tables, each holding data for a specific time range, which makes data management more efficient.
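The chunk routing rule can be sketched as a function from a timestamp (and an optional space-partitioning value) to a chunk key. This is a hypothetical model: the 7-day interval mirrors TimescaleDB's default `chunk_time_interval`, but CRC32 is a stand-in for the hash TimescaleDB actually uses, and real chunk boundaries are managed by the extension.

```python
import zlib
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)
DEFAULT_INTERVAL = timedelta(days=7)  # TimescaleDB's default chunk_time_interval


def chunk_key(ts, interval=DEFAULT_INTERVAL, space_value=None, space_partitions=1):
    """Map a row to a hypothetical (time_slot, space_slot) chunk key.

    CRC32 is a stand-in hash; TimescaleDB uses its own hashing for space partitions.
    """
    time_slot = (ts - EPOCH) // interval  # timedelta // timedelta -> int
    if space_value is None or space_partitions <= 1:
        return (time_slot, 0)
    return (time_slot, zlib.crc32(space_value.encode()) % space_partitions)
```

Every row with the same key lands in the same chunk table, so a query with a time filter can skip whole chunks whose time slot falls outside the range.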

Timescale's own best practices recommend sizing chunks to balance memory efficiency with query performance, avoiding overly small chunks that can degrade query planning and compression.

A constraint inherited from PostgreSQL is that any unique index or primary key on a hypertable must include the time partitioning column. This is because PostgreSQL can only enforce uniqueness within each partition (chunk) individually. While this doesn't affect tables without unique constraints, it can complicate schema design when global uniqueness is required—often forcing composite keys that include the timestamp.
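A toy model shows why the rule exists. The hypothetical `Hypertable` class below checks uniqueness only within a chunk, as a per-partition index would. With a key of `id` alone, a duplicate that lands in a different chunk slips through; with a key of `(id, ts)`, the per-chunk check is equivalent to a global one, because the timestamp decides the chunk.

```python
class Hypertable:
    """Hypothetical toy: uniqueness is checked per chunk, never across chunks."""

    def __init__(self, key_columns, chunk_seconds=7 * 24 * 3600):
        self.key_columns = tuple(key_columns)
        self.chunk_seconds = chunk_seconds
        self.chunks = {}  # chunk id -> set of key tuples stored in that chunk

    def insert(self, row):
        chunk_id = row["ts"] // self.chunk_seconds  # the timestamp picks the chunk
        key = tuple(row[col] for col in self.key_columns)
        seen = self.chunks.setdefault(chunk_id, set())
        if key in seen:
            raise ValueError(f"duplicate key {key} in chunk {chunk_id}")
        seen.add(key)


if __name__ == "__main__":
    by_id = Hypertable(["id"])
    by_id.insert({"id": 1, "ts": 0})
    # Same id a week later: it lands in another chunk, so nothing catches it.
    by_id.insert({"id": 1, "ts": 8 * 24 * 3600})

    by_id_and_ts = Hypertable(["id", "ts"])
    by_id_and_ts.insert({"id": 1, "ts": 0})
    try:
        # An identical (id, ts) always maps to the same chunk, so it is rejected.
        by_id_and_ts.insert({"id": 1, "ts": 0})
    except ValueError as err:
        print(err)
```

Rather than silently enforce uniqueness only per chunk, TimescaleDB refuses to create a unique index that omits the time column.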

Furthermore, while Hypercore's columnar compression helps with storage and analytical queries on older data, incoming writes still go through PostgreSQL's row-based format (B-Tree + heap file) before conversion—adding latency compared to databases that write directly to columnar storage.

All told, compared to Postgres itself, Timescale adds a powerful time series dimension. However, in the "deep end" of time series workloads, the core limitations become clearer.

QuestDB architecture

QuestDB is positioned as a high-performance, open-source time series database (TSDB) written in low-latency (zero-GC) Java, C++, and Rust. It pairs the InfluxDB Line Protocol (ILP), a tried-and-true protocol for high-volume ingestion, with familiar SQL for flexible querying.
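To show what an ILP message looks like, here is a hypothetical Python helper that renders one row as Line Protocol text: the table name and tags (which QuestDB stores as SYMBOL columns), then fields, then a nanosecond timestamp. It covers only the basic escaping rules as we understand them; in practice, QuestDB's official client libraries build and send these lines for you.

```python
def _escape(text: str, specials: str) -> str:
    """Backslash-escape each special character in an ILP name or tag value."""
    for ch in specials:
        text = text.replace(ch, "\\" + ch)
    return text


def _field_value(value) -> str:
    """Render a field value; bool is checked before int because it subclasses int."""
    if isinstance(value, bool):
        return "t" if value else "f"
    if isinstance(value, int):
        return f"{value}i"  # integer fields carry an 'i' suffix
    if isinstance(value, float):
        return repr(value)
    escaped = str(value).replace("\\", "\\\\").replace('"', '\\"')
    return f'"{escaped}"'  # strings are double-quoted


def format_ilp(table: str, tags: dict, fields: dict, ts_ns: int) -> str:
    """Build one line: table[,tag=value...] field=value[,field=value...] timestamp."""
    head = ",".join(
        [_escape(table, ", ")]
        + [f"{_escape(k, ',= ')}={_escape(str(v), ',= ')}" for k, v in tags.items()]
    )
    body = ",".join(f"{_escape(k, ',= ')}={_field_value(v)}" for k, v in fields.items())
    return f"{head} {body} {ts_ns}"


if __name__ == "__main__":
    print(format_ilp("trades", {"symbol": "ETH-USD", "side": "sell"},
                     {"price": 2615.54, "amount": 0.00044}, 1646762637609765000))
```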

At its core, QuestDB implements a three-tier columnar storage architecture optimized for both high-throughput ingestion and fast analytical queries:

Tier 1 - Write-Ahead Log (WAL): Incoming writes land in a WAL for durability. The WAL buffers and sorts out-of-order data before committing to storage, enabling millions of rows per second without sacrificing data integrity.

Tier 2 - Columnar partitions: Data is organized into time-partitioned columnar files. Each partition stores columns in separate files, enabling efficient compression, selective column reads, and parallel SIMD-accelerated scans.

Tier 3 - Cold storage (Parquet): Older partitions can be converted to Parquet and moved to object storage (S3, Azure Blob, GCS). Queries transparently span all tiers, keeping hot data fast while reducing storage costs.
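The WAL-then-partition flow can be sketched as a toy model. Everything here is hypothetical: `ToyWalTable` buffers rows in arrival order, and `commit()` sorts the batch by timestamp and merges it into daily partitions. Real QuestDB persists the WAL, stores each partition as per-column files, and applies commits asynchronously; this sketch only illustrates the ordering logic.

```python
import heapq
from collections import defaultdict

DAY_NS = 86_400 * 10**9  # one day in nanoseconds


class ToyWalTable:
    """Hypothetical model of WAL-buffered, sorted commits into daily partitions."""

    def __init__(self):
        self.wal = []                        # uncommitted (ts_ns, row) pairs, arrival order
        self.partitions = defaultdict(list)  # day number -> rows sorted by timestamp

    def append(self, ts_ns, row):
        # A real WAL would make this durable before acknowledging the write.
        self.wal.append((ts_ns, row))

    def commit(self):
        # Sort out-of-order arrivals once per batch instead of per row.
        batch = sorted(self.wal, key=lambda item: item[0])
        self.wal = []
        by_day = defaultdict(list)
        for ts_ns, row in batch:
            by_day[ts_ns // DAY_NS].append((ts_ns, row))
        for day, rows in by_day.items():
            # Merge the sorted slice into the already-sorted partition.
            self.partitions[day] = list(
                heapq.merge(self.partitions[day], rows, key=lambda item: item[0])
            )

    def column(self, day, name):
        """Project one column of a partition (real storage keeps one file per column)."""
        return [row[name] for _, row in self.partitions[day]]
```

Sorting a whole batch and merging it only into the partitions it touches keeps late data cheap: untouched days are never rewritten.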

Tier One: Hot ingest (WAL), durable by default

Incoming data is appended to the write-ahead log (WAL) with ultra-low latency. Writes are made durable before any processing, preserving order and surviving failures without data loss. The WAL is asynchronously shipped to object storage, so new replicas can bootstrap quickly and read the same history.

Tier Two: Real-time SQL on live data

Data is time-ordered (and, when deduplication is enabled, de-duplicated) into QuestDB's native, time-partitioned columnar format and becomes immediately queryable. This enables real-time analysis with vectorized, multi-core execution, streaming materialized views, and time-series SQL (e.g., ASOF JOIN, SAMPLE BY). The query planner spans tiers seamlessly.
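QuestDB's de-duplication is opt-in per table through upsert keys (declared with `DEDUP UPSERT KEYS(...)`, which must include the designated timestamp): when an incoming row matches an existing row on all upsert keys, it replaces that row. A minimal Python sketch of that rule, using hypothetical in-memory rows keyed on `ts` and `sym`:

```python
def dedup_upsert(existing, incoming, keys):
    """Keep one row per upsert-key tuple; a later row replaces an earlier one."""
    merged = {}
    for row in [*existing, *incoming]:
        merged[tuple(row[k] for k in keys)] = row  # last write wins
    return sorted(merged.values(), key=lambda row: row["ts"])
```

Because the timestamp is always part of the key, re-sending the same batch after a failure is idempotent: the replayed rows simply overwrite themselves.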

Tier Three: Cold storage, open and queryable

Older data is automatically tiered to object storage in Apache Parquet. Query it in-place through QuestDB or use any tool that reads Parquet. This delivers predictable costs, interoperability with AI/ML tooling, and zero lock-in.

Unlike TimescaleDB's hypertable architecture built on PostgreSQL's row-based storage, QuestDB stores all time series in a single columnar table structure. This means adding new series (high cardinality) doesn't create additional storage overhead—the same columnar files simply contain more rows. This architectural difference explains why QuestDB maintains consistent performance as cardinality scales.

QuestDB is built on open formats—Parquet today, with Iceberg and Arrow on the roadmap—keeping your data portable and free from vendor lock-in. While TimescaleDB ties data to PostgreSQL's storage format, QuestDB still benefits from the PostgreSQL ecosystem via PGWire connectivity.

In developing QuestDB from the ground up, the team focused on:

- Performance: Built for high-throughput and high-cardinality workloads. Query speeds are accelerated via SIMD instructions, a custom JIT compiler for parallel filters, and time-partitioned storage.
- Flexibility: Schema-less ingestion allows dynamic column addition. Concurrent schema changes don't compromise writes.
- Compatibility: Supports InfluxDB Line Protocol (extended for arrays), PGWire, REST API, CSV, and Parquet import/export. High-performance client libraries available in multiple languages.
- Enhanced SQL: Time series-specific SQL extensions simplify querying temporal data.
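Schema-less ingestion can be pictured as a table that grows a new column the first time a field name appears, with NULLs for rows written before it existed. A hypothetical sketch of that behavior, not QuestDB's implementation (which also has to infer column types):

```python
class SchemalessTable:
    """Hypothetical toy table that adds a column the first time a field name appears."""

    def __init__(self):
        self.columns: dict[str, list] = {}
        self.row_count = 0

    def ingest(self, fields: dict) -> None:
        for name in fields:
            if name not in self.columns:
                # New column: earlier rows have no value, so back-fill with None (NULL).
                self.columns[name] = [None] * self.row_count
        for name, values in self.columns.items():
            # Columns missing from this row also get None.
            values.append(fields.get(name))
        self.row_count += 1
```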

TimescaleDB limitations

Both databases have their strengths, and each also has its limitations. We'll start with TimescaleDB.

Temporal JOINs and missing time-based extensions

Timescale does not support joining tables based on the nearest timestamp, also known as ASOF JOIN. These joins are heavily used and relied upon in financial markets.

TimescaleDB also lacks some easy ways to manipulate time series data. For example, retrieving the latest data for a given attribute/channel in the data (such as currency pair or sensor type) involves computing expensive lateral joins.
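Conceptually, "latest row per key" is a single pass that keeps the newest row seen for each key; QuestDB exposes this directly in SQL as `LATEST ON <timestamp> PARTITION BY <column>`. The Python sketch below illustrates only the semantics, with hypothetical row dictionaries, not how either database executes it.

```python
def latest_by(rows, key, ts="ts"):
    """Return the most recent row for each distinct value of `key` in one pass."""
    latest = {}
    for row in rows:
        current = latest.get(row[key])
        # Keep this row if it is the first for its key or at least as recent.
        if current is None or row[ts] >= current[ts]:
            latest[row[key]] = row
    return latest
```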

In QuestDB, the nearest-timestamp join is a single clause:

```sql
-- QuestDB provides ASOF JOIN. Since the table definitions include
-- the designated timestamp column, no time condition is needed.
SELECT t.*, n.*
FROM trades t
ASOF JOIN news n ON (symbol);
```
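The semantics of that query can be sketched in Python: for each trade, take the most recent news row for the same symbol whose timestamp is less than or equal to the trade's, or nothing if none exists (QuestDB returns NULLs in that case). The row dictionaries and the `ts` field name are illustrative assumptions.

```python
import bisect
from collections import defaultdict


def asof_join(left, right, on, ts="ts"):
    """Pair each left row with the latest right row sharing `on` with right.ts <= left.ts."""
    by_key = defaultdict(list)
    for row in sorted(right, key=lambda r: r[ts]):
        by_key[row[on]].append(row)
    stamps = {key: [r[ts] for r in rows] for key, rows in by_key.items()}

    joined = []
    for row in left:
        candidates = stamps.get(row[on], [])
        # bisect_right finds the first stamp > row[ts]; the one before it is the match.
        i = bisect.bisect_right(candidates, row[ts]) - 1
        match = by_key[row[on]][i] if i >= 0 else None  # None plays the role of NULLs
        joined.append((row, match))
    return joined
```

Each lookup is a binary search over one symbol's sorted timestamps, which is why the operation is cheap when data is already stored in time order.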

Additionally, there are no built-in functions to deal with periodic intervals. Timescale does however offer continuous aggregate views. These views pre-aggregate data into summary tables, optimizing queries that compute statistics over fixed time intervals.
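A continuous aggregate behaves roughly like a summary table that is refreshed incrementally instead of recomputed from scratch. The hypothetical `HourlyRollup` class below folds only newly inserted rows into per-hour sums and counts on each refresh. It is a simplified model, not TimescaleDB's refresh mechanism, which also deals with refresh windows and changes to historical data.

```python
from collections import defaultdict


class HourlyRollup:
    """Hypothetical incrementally maintained hourly average, refreshed in batches."""

    def __init__(self, bucket_seconds=3600):
        self.bucket_seconds = bucket_seconds
        self.sums = defaultdict(float)
        self.counts = defaultdict(int)
        self.pending = []  # raw rows inserted since the last refresh

    def insert(self, ts, value):
        self.pending.append((ts, value))  # raw data lands in the base table first

    def refresh(self):
        # Fold only the rows that arrived since the last refresh into their buckets.
        for ts, value in self.pending:
            bucket = ts - ts % self.bucket_seconds
            self.sums[bucket] += value
            self.counts[bucket] += 1
        self.pending.clear()

    def avg(self, bucket):
        """Average for a bucket start, or None if the bucket has no refreshed data."""
        count = self.counts.get(bucket, 0)
        return self.sums[bucket] / count if count else None
```

Queries against the rollup are fast, but they only reflect data up to the last refresh, which is the usual trade-off of pre-aggregation.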

Schema change overhead

Schema adjustments in TimescaleDB, though possible through ALTER TABLE ADD COLUMN operations, are not without their drawbacks. These modifications become more painful as the database grows, primarily due to the extensive re-indexing required by PostgreSQL for both old and new rows.

Ingestion performance

TimescaleDB's performance tuning requires balancing index usage, foreign key constraints, and unique keys—each adds overhead that impacts insert speed. Optimizing for time series workloads demands careful trade-offs between data integrity features and ingestion throughput.

High cardinality data

TimescaleDB's ingestion rate degrades with high-cardinality datasets due to its row-based ingestion and heavy indexing. The recommended mitigation—minimizing indices, foreign keys, and unique keys—runs counter to PostgreSQL's traditional strengths.

PostgreSQL extension

Installing TimescaleDB requires PostgreSQL first, then the extension, then time-series-specific tuning. QuestDB, by contrast, is dependency-free and ready out of the box. This is less of an issue on managed cloud platforms, but it adds maintenance overhead when self-hosting.

QuestDB limitations

Next, let's look at QuestDB.

Type of workload & use case

TimescaleDB, built on PostgreSQL's row-based architecture, supports OLTP operations (INSERT, UPSERT, UPDATE, DELETE, triggers). QuestDB is columnar and optimized for OLAP and time series workloads—high ingest throughput, fast queries, and time-based analytics common in financial services, IoT, and Ad-Tech.

QuestDB is not designed for OLTP and is less suited for log monitoring use cases.

Weaker PostgreSQL compatibility

QuestDB supports PGWire, enabling use with most PostgreSQL libraries. However, it only supports forward-only cursors—not scrollable cursors via DECLARE CURSOR and FETCH. Some drivers like psycopg2 rely on scrollable cursors and may not be fully compatible.

Ecosystem maturity

Timescale (2016) has a head start over QuestDB (2019), resulting in a wider array of third-party integrations. QuestDB's ecosystem is growing but remains smaller.

Closed source features

Several additional QuestDB features are closed-source under a proprietary license. These include replication, role-based access control, TLS, and compression. This is also true for Timescale.

Conclusion

As with all databases, choosing the "perfect" one depends on your business requirements, data model, and use case.

TimescaleDB is a natural fit for existing PostgreSQL users. However, being built on top of PostgreSQL it inherits row-based limitations that affect time series and analytical workloads. The benchmark results reflect these architectural differences.

QuestDB is less mature as an overall ecosystem. But where performance matters, it is an attractive, developer-friendly choice for demanding workloads. The community is active, enthusiastic, and growing rapidly.

Many marketing pages make grand performance claims. But until your prospective database contains your own data and is hosted in your own infrastructure, you just won't know.

To get started, try our live demo, download QuestDB, or explore QuestDB Enterprise for production deployments.