Deploying to Google Cloud Platform (GCP)

Hardware recommendations

CPU/RAM

A production instance for QuestDB should be provisioned with at least 4 vCPUs and 8 GiB of memory. If possible, a 1:4 vCPU/RAM ratio should be used. Some use cases may benefit from a 1:8 ratio if this allows the entire working set to fit into memory; this can increase query performance by as much as 10x.

It is not recommended to run production workloads on fewer than 4 vCPUs, as below this number, parallel querying optimisations may be disabled. This ensures that sufficient resources are available for ingestion.
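The sizing guidance above can be expressed as a small check. This is a minimal sketch rather than anything shipped with QuestDB: the function name `check_sizing` and its warning messages are hypothetical, and the thresholds are copied from the text.

```python
def check_sizing(vcpus: int, ram_gib: float) -> list[str]:
    """Return warnings for an instance shape, using the thresholds in the text."""
    if vcpus <= 0:
        raise ValueError("vcpus must be positive")
    warnings = []
    if vcpus < 4:
        # Below 4 vCPUs, parallel query optimisations may be disabled.
        warnings.append("fewer than 4 vCPUs")
    if ram_gib < 8:
        warnings.append("less than 8 GiB of memory")
    if ram_gib / vcpus < 4:
        # The 1:4 vCPU/RAM ratio is a "where possible" suggestion, not a hard limit.
        warnings.append("below the suggested 1:4 vCPU/RAM ratio")
    return warnings


print(check_sizing(4, 16))  # meets every threshold
print(check_sizing(2, 4))   # undersized on all three counts
```

Note that the stated minimum of 4 vCPUs and 8 GiB still triggers the ratio warning, which mirrors the "if possible" wording of the recommendation.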

Architecture

QuestDB should be deployed on Intel/AMD architectures (x86_64) rather than on ARM (aarch64). Some optimisations, such as SIMD, are not available on ARM.

Storage

Data should be stored on a data disk with a minimum of 3000 IOPS/125 MBps, and ideally at least 5000 IOPS/300 MBps. Higher-end workloads should scale up the disks appropriately, or use multiple disks if necessary.

It is also worth checking the burst capacity of your storage. This capacity should only be used during heavy I/O spikes/infrequent out-of-order (O3) writes. It is useful to have in case you hit unexpected load bursts.
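As a rough illustration, the disk thresholds above can be turned into a helper that grades a provisioned volume. The function name `classify_disk` and the three labels are hypothetical; only the IOPS and throughput figures come from the text.

```python
MIN_IOPS, MIN_MBPS = 3000, 125      # minimum stated in the text
IDEAL_IOPS, IDEAL_MBPS = 5000, 300  # "ideally at least" figures from the text


def classify_disk(iops: int, throughput_mbps: int) -> str:
    """Grade a data disk against the documented IOPS/throughput guidance."""
    if iops < MIN_IOPS or throughput_mbps < MIN_MBPS:
        return "below minimum"
    if iops >= IDEAL_IOPS and throughput_mbps >= IDEAL_MBPS:
        return "recommended"
    return "minimum"


print(classify_disk(3000, 140))
print(classify_disk(5000, 300))
```

Burst capacity is deliberately left out of the check, since the text treats it as headroom for spikes rather than as part of the provisioned baseline.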

Google Compute Engine with Google Cloud Hyperdisk

Google Compute Engine offers a variety of VM instances tuned for different workloads.

Do not use instances containing the letter A, such as C4A. These are ARM architecture instances using Axion processors.

Either AMD EPYC CPUs (D letter) or Intel Xeon (no letter) are appropriate for x86_64 deployments.
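The naming rule above can be sketched as a small parser. This is a heuristic only: it assumes the letter in question is the suffix that follows the series number (for example the `a` in `c4a`), the function names are hypothetical, and some series do not follow the pattern strictly, so the Compute Engine machine family documentation remains the authority.

```python
import re

# Suffix letter after the series number, interpreted per the rule in the text.
_SUFFIX_TO_FAMILY = {"a": "arm", "d": "amd", "": "intel"}


def cpu_family(machine_type: str) -> str:
    """Guess the CPU family from a machine type name such as 'c3d-standard-4'."""
    match = re.match(r"^[a-z]+\d+([a-z]*)-", machine_type.lower())
    if match is None or match.group(1) not in _SUFFIX_TO_FAMILY:
        raise ValueError(f"unrecognised machine type: {machine_type!r}")
    return _SUFFIX_TO_FAMILY[match.group(1)]


def is_x86_64(machine_type: str) -> bool:
    """True when the name suggests an Intel or AMD (x86_64) instance."""
    return cpu_family(machine_type) != "arm"


for name in ("c4a-standard-4", "c3d-standard-4", "c3-standard-4"):
    print(name, cpu_family(name), is_x86_64(name))
```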

We recommend starting with C-Series instances, and reviewing other instance types if your workload demands it.

You should deploy using an x86_64 Linux distribution, such as Ubuntu.

For storage, we recommend using Hyperdisk Balanced disks, and provisioning them at 5000 IOPS/300 MBps until you have tested your workload.

Hyperdisk Extreme generally requires much higher vCPU counts; for example, it cannot be used on C3 machines smaller than 88 vCPUs.

For the file system, use zfs with lz4, as this will compress your data. If compression is not a concern, use ext4 or xfs for slightly higher performance.

Google Filestore

Google Filestore is a NAS solution offering an NFS API to talk to arbitrary volumes.

This should not be used as primary storage for QuestDB. It could be used for replication in QuestDB Enterprise, but Google Cloud Storage is likely simpler and cheaper to use.

Google Cloud Storage

QuestDB supports Google Cloud Storage as its replication object-store in the Enterprise edition.

To get started, create a bucket for the database to use. Then follow the Enterprise Quick Start steps to create a connection string and configure QuestDB.

Minimum specification

- Instance: c3-standard-4 or c3d-standard-4 (4 vCPUs, 16 GB RAM)
- Storage
  - OS disk: Hyperdisk Balanced (30 GiB) volume provisioned with 3000 IOPS/140 MBps.
  - Data disk: Hyperdisk Balanced (100 GiB) volume provisioned with 3000 IOPS/140 MBps.
- Operating System: Linux Ubuntu 24.04 LTS x86_64.
- File System: ext4

Better specification

- Instance: c3-highmem-8 or c3d-highmem-8 (8 vCPUs, 64 GB RAM)
- Storage
  - OS disk: Hyperdisk Balanced (30 GiB) volume provisioned with 5000 IOPS/300 MBps.
  - Data disk: Hyperdisk Balanced (300 GiB) volume provisioned with 5000 IOPS/300 MBps.
- Operating System: Linux Ubuntu 24.04 LTS x86_64.
- File System: zfs

You can use the highcpu and highmem variants to adjust the standard 4:1 RAM/vCPU ratio to 2:1 or 8:1 respectively. Higher RAM can improve performance dramatically if it means your working set data will fit entirely into memory.
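To make the ratios concrete, the sketch below estimates memory from a machine type name using the 2:1, 4:1 and 8:1 figures quoted above. The helper name `expected_memory_gib` is hypothetical, and the result is nominal: actual memory varies slightly between series, so treat it as an estimate.

```python
# Nominal GiB of RAM per vCPU for each variant, as described in the text.
GIB_PER_VCPU = {"highcpu": 2, "standard": 4, "highmem": 8}


def expected_memory_gib(machine_type: str) -> int:
    """Estimate memory for names shaped like '<series>-<variant>-<vcpus>'."""
    parts = machine_type.lower().split("-")
    if len(parts) < 3 or parts[1] not in GIB_PER_VCPU:
        raise ValueError(f"unsupported machine type: {machine_type!r}")
    return GIB_PER_VCPU[parts[1]] * int(parts[2])


for name in ("c3-highcpu-8", "c3-standard-4", "c3-highmem-8"):
    print(name, expected_memory_gib(name), "GiB")
```

The results for c3-standard-4 and c3-highmem-8 agree with the 16 GB and 64 GB figures in the two specifications above.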

Launching QuestDB on Google Compute Engine

This guide describes how to run QuestDB on a new Google Cloud Platform (GCP) Compute Engine instance. After completing this guide, you will have an instance with QuestDB running in a container using the official QuestDB Docker image, as well as a network rule that enables communication over HTTP and PostgreSQL wire protocol.

Prerequisites

- A Google Cloud Platform (GCP) account and a GCP Project

- The Compute Engine API must be enabled for the corresponding Google Cloud Platform project

Create a Compute Engine VM

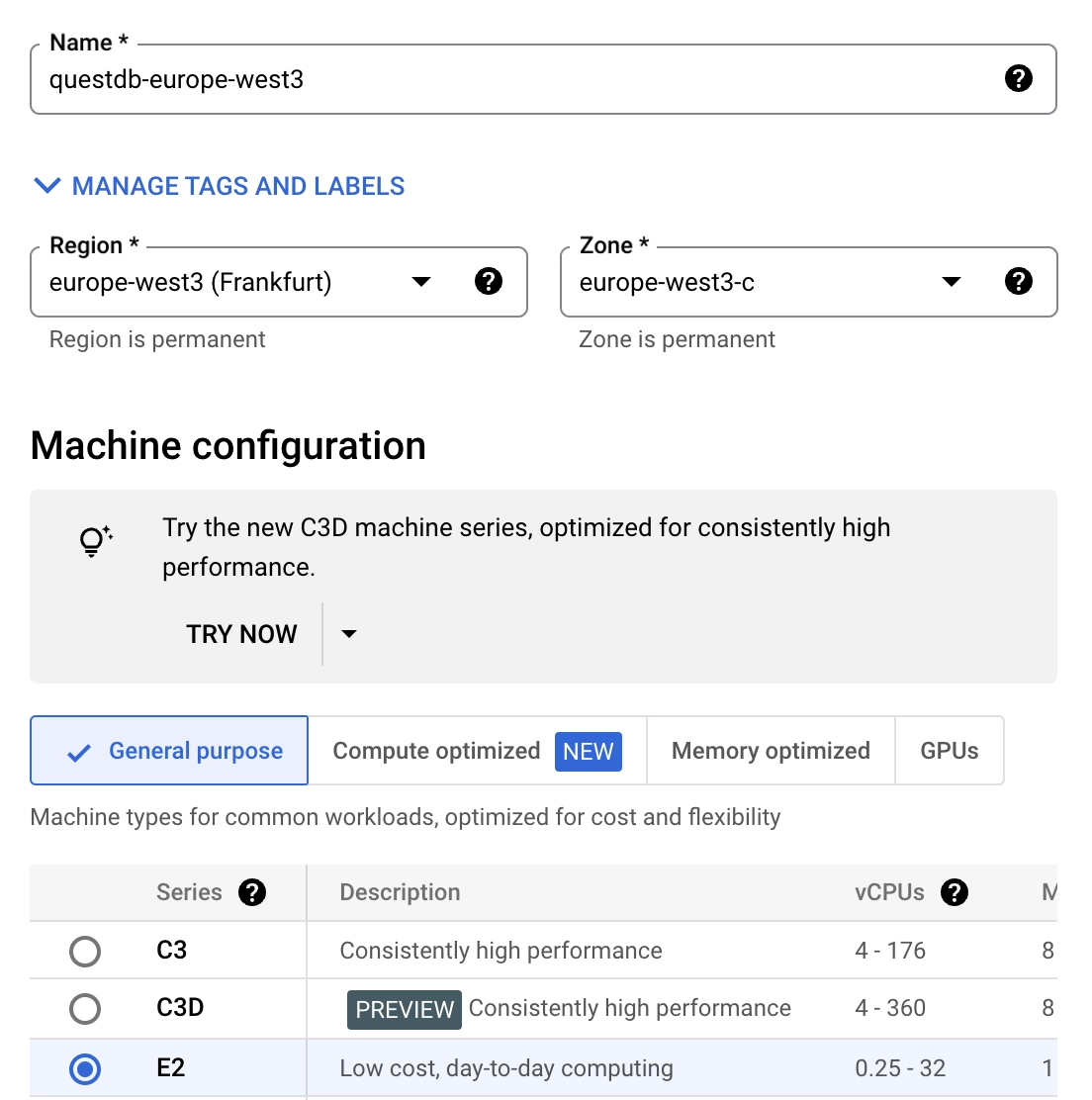

- In the Google Cloud Console, navigate to Compute Engine and click Create Instance

- Give the instance a name. This example uses questdb-europe-west3

- Choose a Region and Zone where you want to deploy the instance. This example uses europe-west3 (Frankfurt) and the default zone

- Choose a machine configuration. The default choice, e2-medium, is a general-purpose instance with 4 GB of memory and should be enough to run this example.

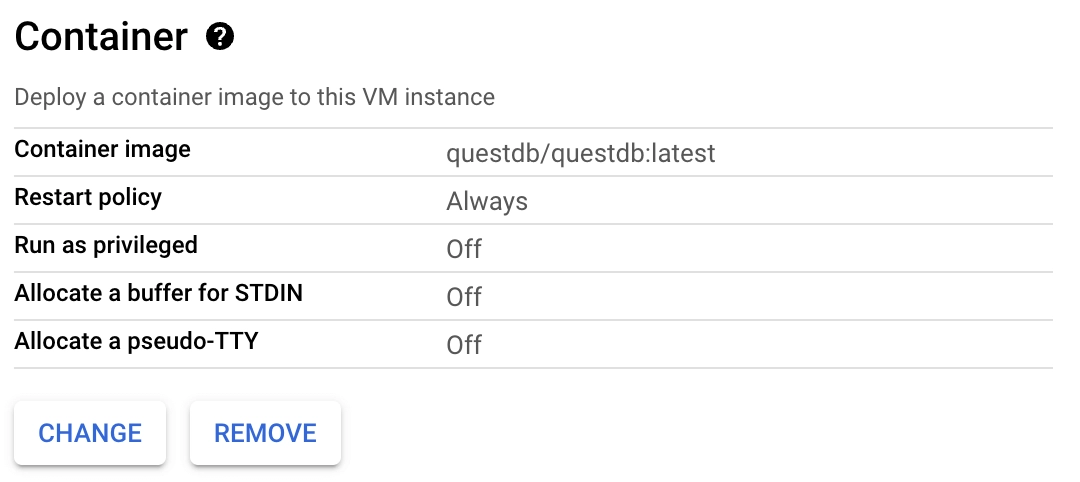

To add a running QuestDB container on instance startup, scroll down and click the Deploy Container button. Then, enter the latest QuestDB Docker image, questdb/questdb:latest, in the Container image textbox. Click the Select button at the bottom of the dropdown to complete the container configuration.

Your Docker configuration should look like this:

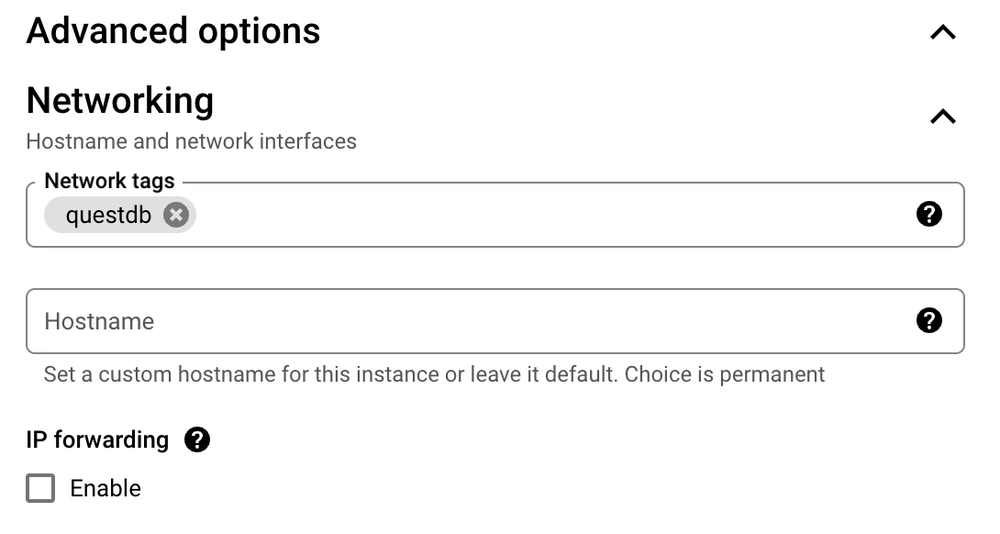

Before creating the instance, we need to assign it a Network tag so that we can add a firewall rule that exposes QuestDB-related ports to the internet. This is required for you to access the database from outside your VPC. To create a Network tag:

- Expand the Advanced options menu below the firewall section, and then expand the Networking panel

- In the Networking panel, add a Network tag to identify the instance. This example uses questdb

You can now launch the instance by clicking Create at the bottom of the dialog.

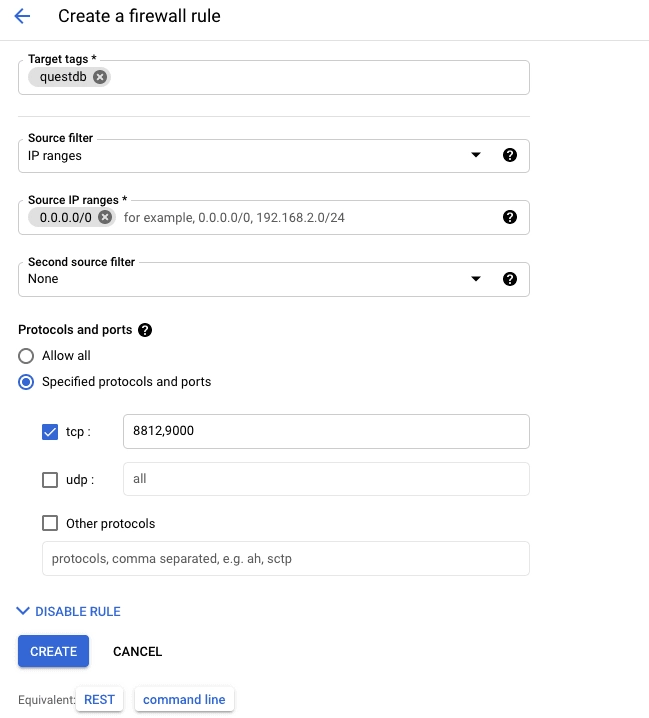

Create a firewall rule

Now that we've created our instance with a questdb network tag, we need to create a corresponding firewall rule to associate with that tag. This rule will expose the required ports for accessing QuestDB. With a network tag, we can easily apply the new firewall rule to our newly created instance as well as any other QuestDB instances that we create in the future.

- Navigate to the Firewall configuration page under Network Security -> Firewall policies

- Click the Create firewall rule button at the top of the page

- Enter questdb in the Name field

- Scroll down to the Targets dropdown and select "Specified target tags"

- Enter questdb in the Target tags textbox. This will apply the firewall rule to the new instance that was created above

- Under Source filter, enter an IP range that this rule applies to. This example uses 0.0.0.0/0, which allows ingress from any IP address. We recommend that you make this rule more restrictive, and naturally that you include your current IP address within the chosen range.

- In the Protocols and ports section, select Specified protocols and ports, check the TCP option, and type 8812,9000 in the textbox.

- Scroll down and click the Create button

All VM instances on Compute Engine in this account which have the Network tag questdb will now have this firewall rule applied.

The ports we have opened are:

- 9000 for the REST API and Web Console

- 8812 for the PostgreSQL wire protocol
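If you prefer to script the rule instead of clicking through the console, the same settings map onto `gcloud compute firewall-rules create`. The sketch below only builds and prints the command; it assumes the gcloud CLI is installed and authenticated, the helper name `firewall_rule_command` is hypothetical, and 203.0.113.0/24 is a placeholder range to replace with your own.

```python
import ipaddress
import shlex


def firewall_rule_command(name: str, tag: str, source_range: str, ports: list[int]) -> list[str]:
    """Build a gcloud command that opens TCP ports to instances carrying a network tag."""
    # Raises ValueError if the source range is not a valid CIDR block.
    ipaddress.ip_network(source_range, strict=False)
    allow = ",".join(f"tcp:{port}" for port in ports)
    return [
        "gcloud", "compute", "firewall-rules", "create", name,
        "--direction=INGRESS",
        f"--allow={allow}",
        f"--target-tags={tag}",
        f"--source-ranges={source_range}",
    ]


command = firewall_rule_command("questdb", "questdb", "203.0.113.0/24", [8812, 9000])
print(shlex.join(command))
```

Printing the command first lets you review it before running it, for example by pasting it into a shell or passing the list to `subprocess.run(command, check=True)`.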

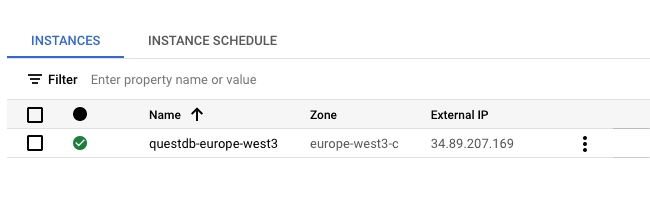

Verify the deployment

To verify that the instance is running, navigate to Compute Engine -> VM Instances. A status indicator should show the instance as running:

To verify that the QuestDB deployment is operating as expected:

- Copy the External IP of the instance

- Navigate to http://<external_ip>:9000 in a browser

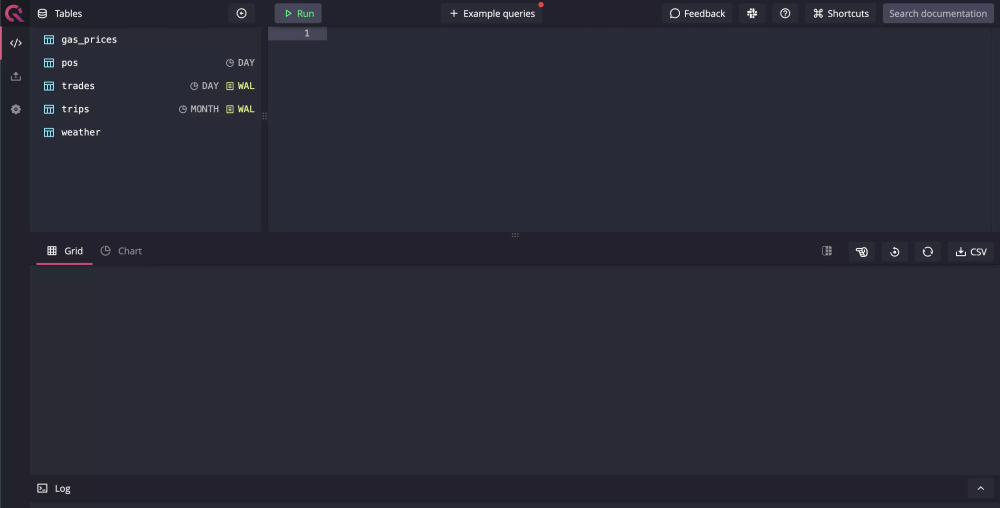

The Web Console should now be visible:

Alternatively, a request may be sent to the REST API exposed on port 9000:

    curl -G \
      --data-urlencode "query=SELECT * FROM telemetry_config" \
      <external_ip>:9000/exec
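The same request can be made from Python using only the standard library. This is a minimal sketch: the helper names `exec_url` and `run_query` are hypothetical, and the optional user and password arguments assume that HTTP basic authentication has been configured on the instance (for example through the QDB_HTTP_USER and QDB_HTTP_PASSWORD settings).

```python
import base64
import json
import urllib.parse
import urllib.request
from typing import Optional


def exec_url(host: str, query: str, port: int = 9000) -> str:
    """Build the /exec URL, percent-encoding the SQL as curl --data-urlencode does."""
    params = urllib.parse.urlencode({"query": query}, quote_via=urllib.parse.quote)
    return f"http://{host}:{port}/exec?{params}"


def run_query(host: str, query: str, user: Optional[str] = None,
              password: Optional[str] = None, timeout: float = 10.0) -> dict:
    """Send the query to the REST API and return the decoded JSON response."""
    request = urllib.request.Request(exec_url(host, query))
    if user is not None and password is not None:
        token = base64.b64encode(f"{user}:{password}".encode()).decode()
        request.add_header("Authorization", f"Basic {token}")
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)


# Example call (needs a reachable instance, so it is left commented out):
# result = run_query("<external_ip>", "SELECT * FROM telemetry_config")
print(exec_url("<external_ip>", "SELECT * FROM telemetry_config"))
```

A connection error or timeout from `run_query` usually points at the firewall rule or the container not having started yet, rather than at QuestDB itself.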

Set up GCP with Pulumi

If you're using Pulumi to manage your infrastructure, you can create a QuestDB instance with the following:

    import pulumi
    import pulumi_gcp as gcp

    # Create a firewall rule that opens the QuestDB ports on instances tagged "questdb"
    firewall = gcp.compute.Firewall(
        "questdb-firewall",
        network="default",
        allows=[
            gcp.compute.FirewallAllowArgs(
                protocol="tcp",
                ports=["9000", "8812"],  # REST API/Web Console and PostgreSQL wire protocol
            ),
        ],
        target_tags=["questdb"],
        source_ranges=["0.0.0.0/0"],  # Any IP address; make this more restrictive where possible
    )

    # Create a Compute Engine instance that starts QuestDB in Docker on boot
    instance = gcp.compute.Instance(
        "questdb-instance",
        machine_type="e2-medium",
        zone="us-central1-a",
        boot_disk={
            "initialize_params": {
                "image": "ubuntu-os-cloud/ubuntu-2004-lts",
            },
        },
        network_interfaces=[
            gcp.compute.InstanceNetworkInterfaceArgs(
                network="default",
                access_configs=[{}],  # Ephemeral public IP
            )
        ],
        metadata_startup_script="""#!/bin/bash
    sudo apt-get update
    sudo apt-get install -y docker.io
    sudo docker run -d -p 9000:9000 -p 8812:8812 \
        --env QDB_HTTP_USER="admin" \
        --env QDB_HTTP_PASSWORD="quest" \
        questdb/questdb
    """,
        tags=["questdb"],  # Must match the firewall rule's target tag
    )

    # Export the instance's name and public IP
    pulumi.export("instanceName", instance.name)
    pulumi.export("instance_ip", instance.network_interfaces[0].access_configs[0].nat_ip)