Leveraging LLMs to Interact with QuestDB Data

Thanks to Large Language Models (LLMs), interacting with your data is easier than ever. Previously, you needed to know SQL, a coding language like Python, or some Business Intelligence (BI) tool to query and analyze your data stored away in some database. But now, you can simply ask questions in plain English, and let the LLM contextualize that data for you.

Every day, new advancements in AI are lowering this barrier further. At first, one would need to ask an LLM to write out a SQL query to translate prompts like "show me the average price of Bitcoin in the last five days" and copy-paste that into a dashboard or BI tool. Then came Model Context Protocols (MCPs), which standardized how LLMs can retrieve information from external sources that the model was not trained on. Once the MCP server is connected, you can now interact with the database directly from your LLM interface (whether that be your terminal, IDE, or LLM application) instead of having to switch context. And now, we are seeing semi-autonomous agents automating more and more of this away.

In this article, we'll explore how to leverage LLM tools like Claude to interact with QuestDB. We'll first look at utilizing QuestDB's built-in REST API as well as using an OSS PostgreSQL MCP server. Let's explore how these two approaches differ in philosophy and how we can leverage either path based on the use case.

TIP

You can follow along by using the example prompts to any of the LLM tool of your choice. If you get stuck, you can reference the code snippets for a working demo.

REST API: The Direct HTTP Approach

One of the interesting aspects of QuestDB is that it exposes a REST HTTP API endpoint on port

9000 for querying data. Specifically, it has two endpoints with ?query parameter:

/exp: export SQL query as CSV/exec: runs SQL query and returns results as JSON

Since REST APIs are well understood and widely used, this makes it easy for LLM tools to use established libraries in whatever coding language to query data. Let's use Claude to demonstrate how easy this is. My prompt was:

QuestDB has a REST API endpoint to query data(https://questdb.com/docs/query/overview/#rest-http-api),write up a quick demo to ingest some crypto dataand show how you can interact with it.

It then gave me the following instructions:

- Run QuestDB via Docker:

docker run -p 9000:9000 -p 8812:8812 questdb/questdb

- Install Python dependencies:

pip install requests pandas matplotlib aiohttp

NOTE

The code examples for this post are available at https://github.com/questdb/questdb-llm-interaction-post-examples/tree/main.

- Run the following code:

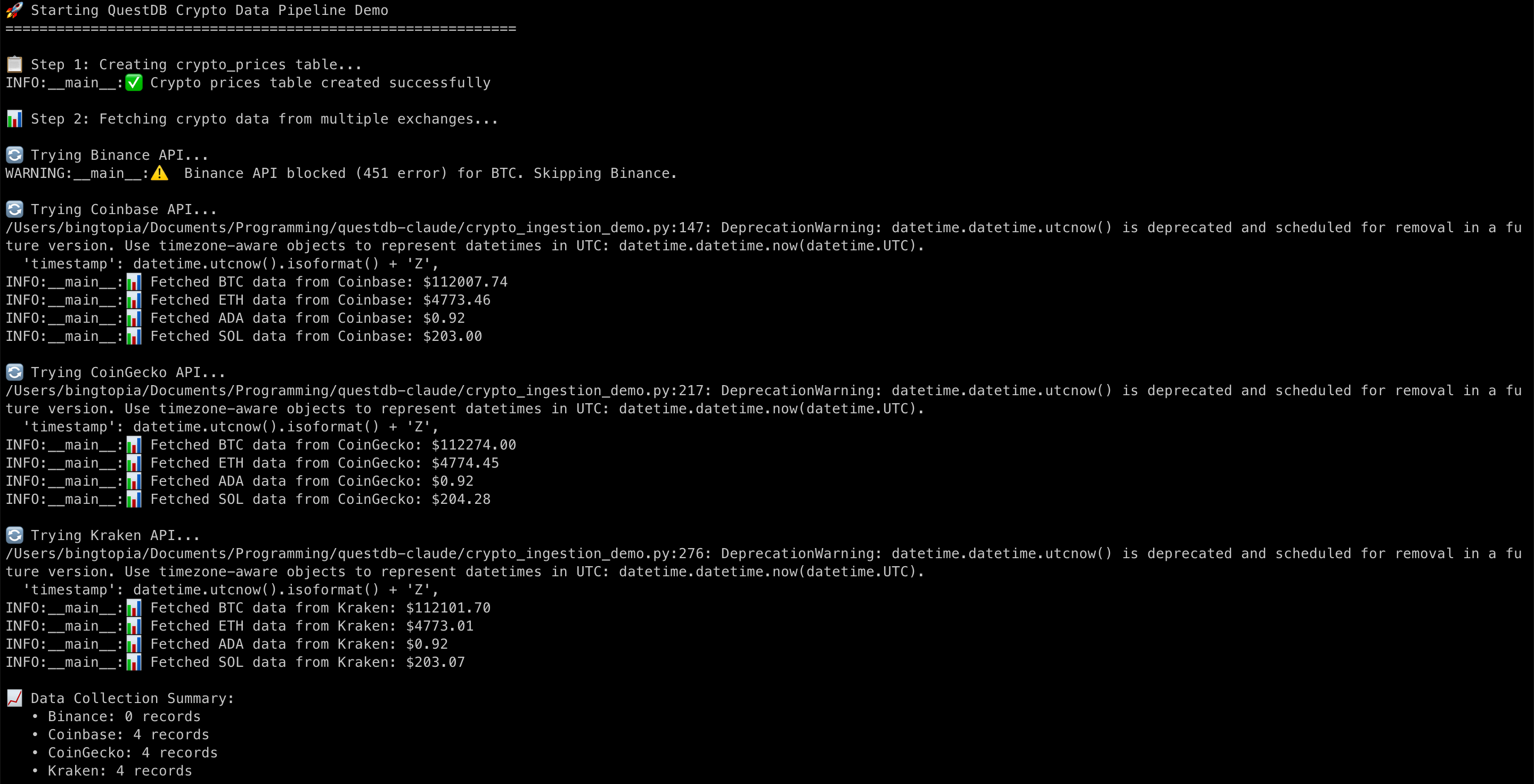

crypto_ingestion_demo.py. At first, it gave me a demo of fetching prices from Binance and Coinbase APIs. Binance was returning451 HTTP error, so I asked it to update and gave me the following:

Once you run that demo code above, we get some records into our database.

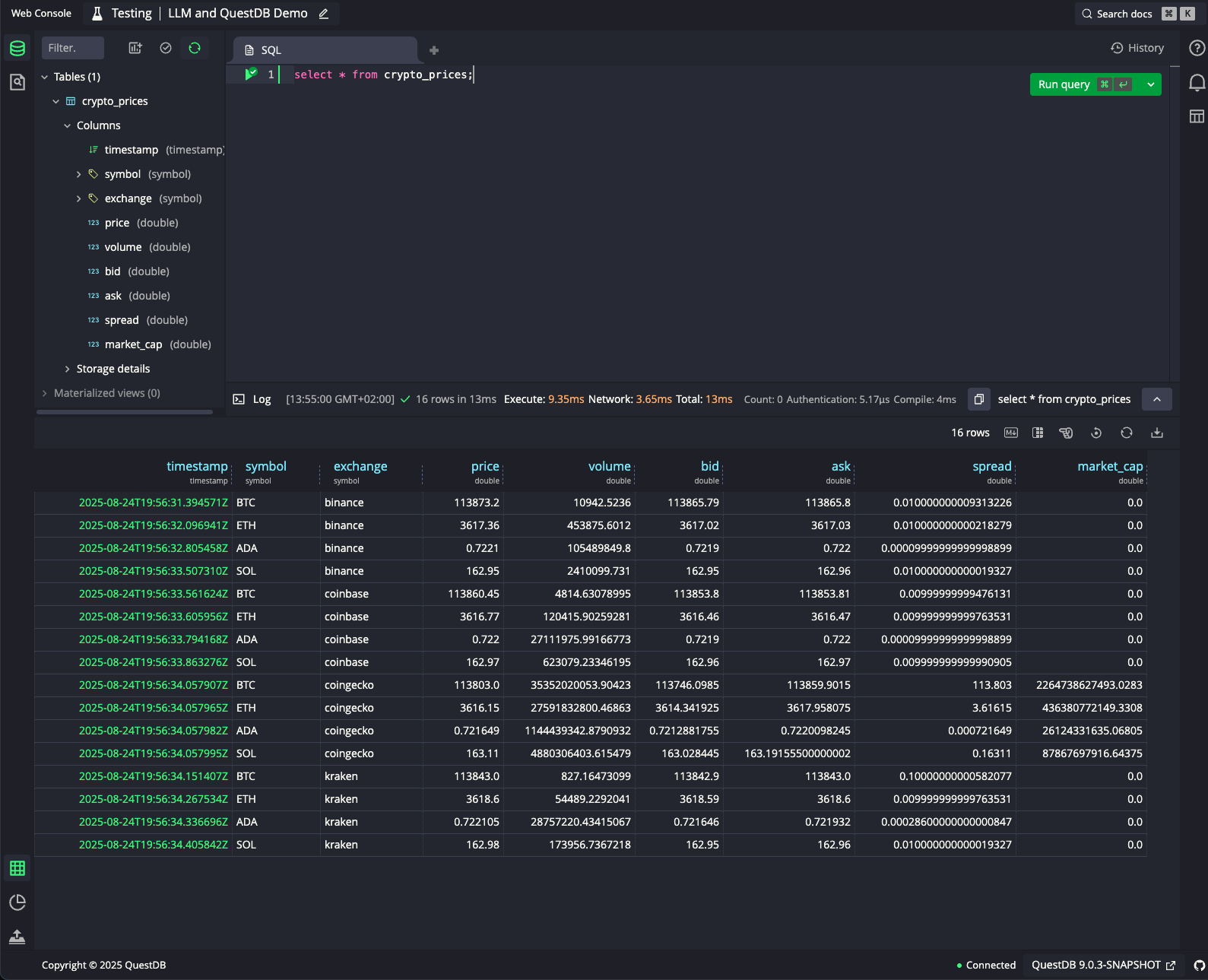

I ran the code a few

times and queried the data via web console at localhost:9000.

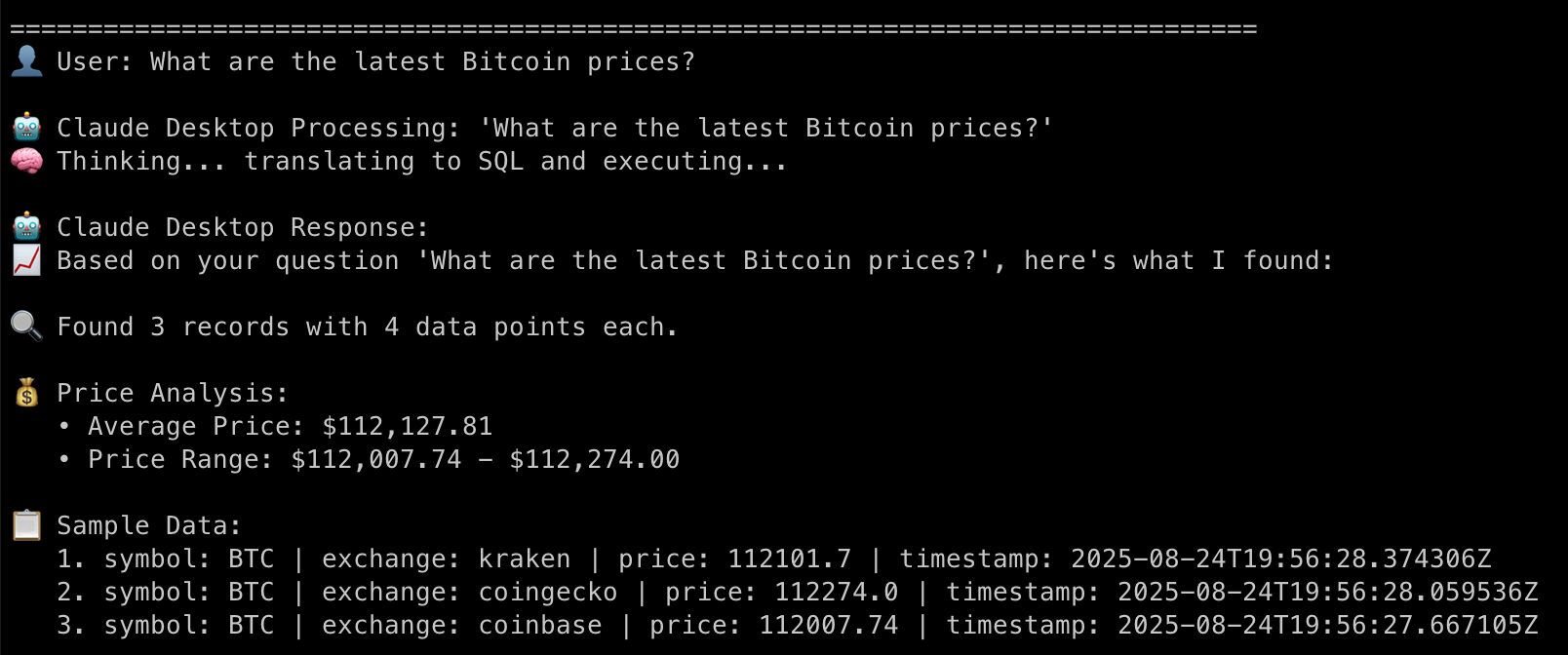

Claude also generated a script to mimic some natural language interaction and some sample

analysis queries in crypto_analyst.py file as shown below:

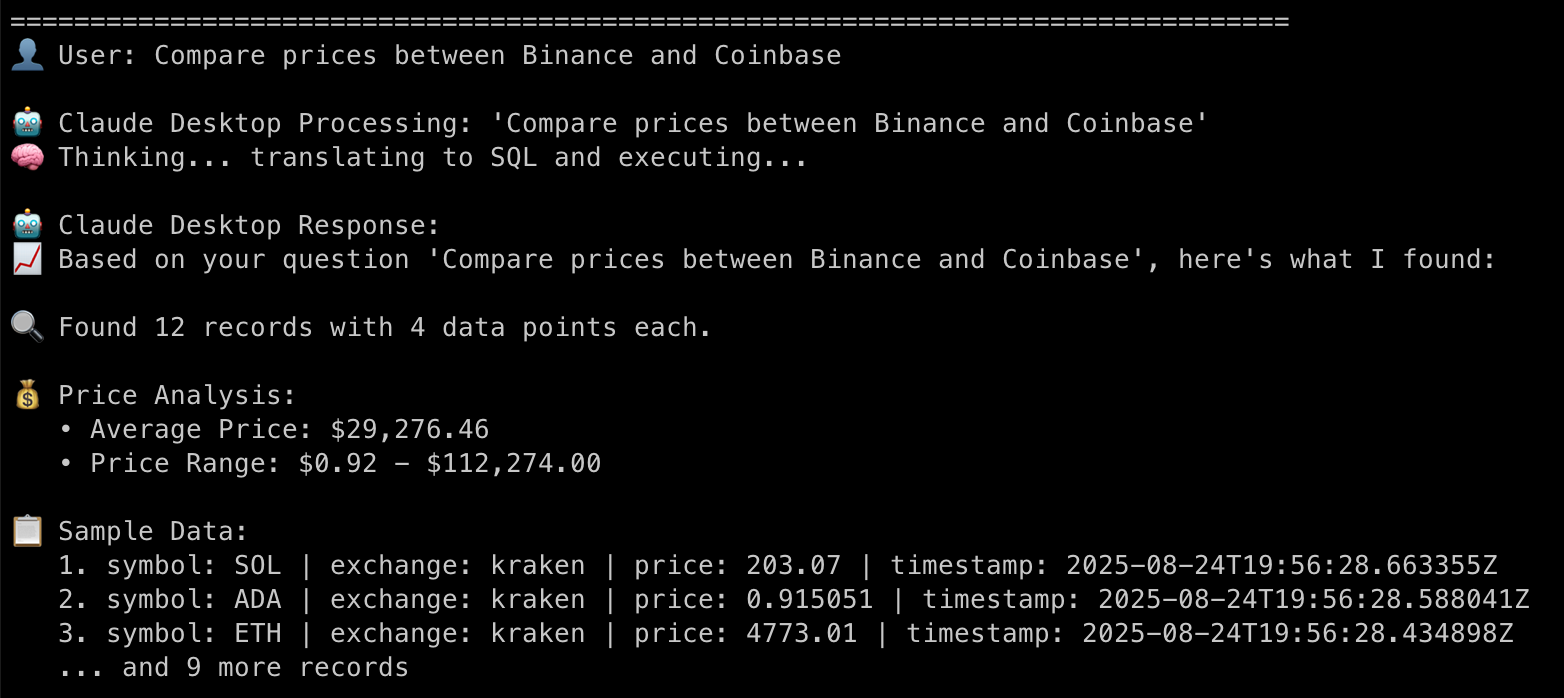

We’ll need bit more data for some more advanced queries like arbitrage opportunities one, but we can at least see hardcoded mappings of user intent to analysis as well as csv exports and graph generation:

As with all LLMs, not everything is perfect but it’s easy to ask Claude to fix itself. For example, hardcoded query of comparing Binance and Coinbase fails because we didn’t actually get any data from Binance and the query just uses Kraken’s data.

Pros and Cons of REST API Approach

As shown by the demo code above, it's extremely easy to generate code samples using the REST API to ingest and query data in QuestDB. The biggest advantage here is that there is no additional infrastructure needed as communication happens directly with QuestDB. Common production-level concerns like authentication, connection pooling, etc., are already solved problems that we need to ask the LLM to implement. Since there's great support for HTTP interaction in almost any programming language, this can be deployed almost anywhere (e.g., locally, web environments, or serverless functions).

However, there are some downsides to this approach. First, we had to map the user intent to queries ourselves in this demo. Otherwise the LLM would have to dynamically do this and generate the REST API call for us with the query. Also, due to the stateless nature of HTTP, we were creating new connections per query and context management (aka "memory") was left for us to implement. This may not be important, but if you want to "chain" queries together or go back to your analysis, this can become cumbersome to implement yourself.

PostgreSQL MCP Server: The Protocol-Native Approach

Now, let's see what the experience is like leveraging a PostgreSQL MCP server. For those unfamiliar with MCP, it is a framework in which AI tools can have structured, persistent access to external systems. You can think of it like REST for AI-native systems. Because QuestDB has a PostgreSQL-compatible interface running on port 8812, we can simply leverage the OSS PostgreSQL MCP server to query data.

If you're using Claude Desktop as an MCP client, you can go to Settings > Developer or

simply find the claude_desktop_config.json file and add the following:

{"mcpServers": {"postgres": {"command": "npx","args": ["@modelcontextprotocol/server-postgres","postgresql://admin:quest@localhost:8812/qdb"]}}}

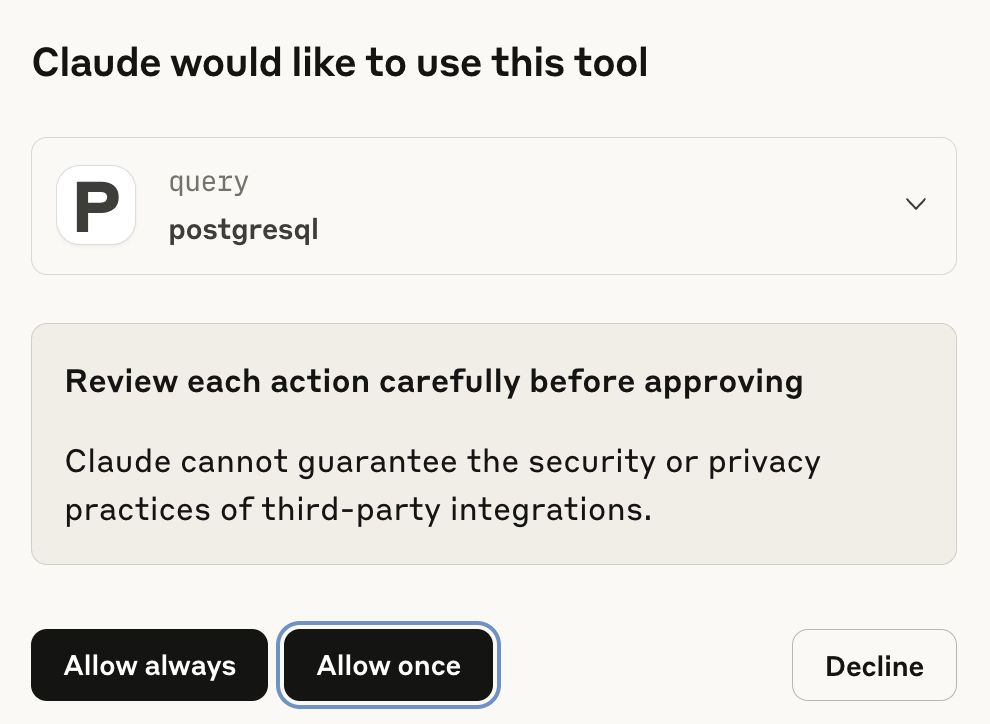

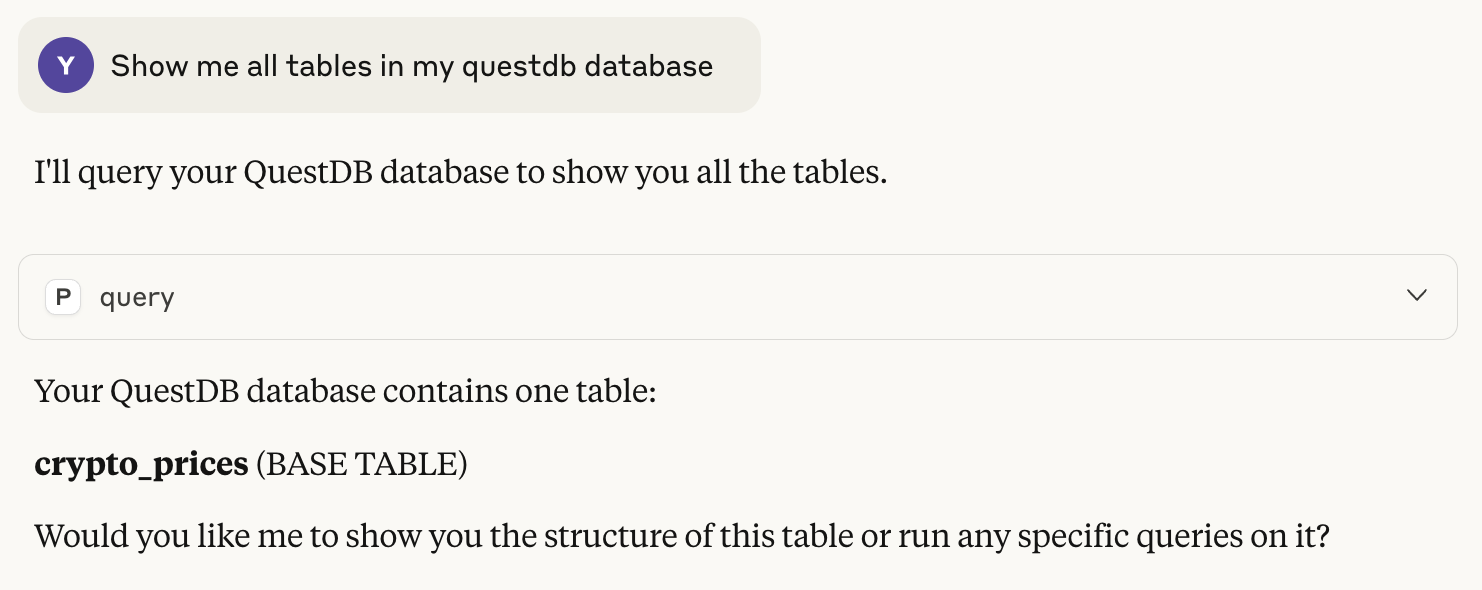

Note: we are using an archived version of the PostgreSQL MCP for demo purposes. You can elect to use Google's GenAI Toolbox integration instead for production purposes. Once you save the config, we need to restart our agent. Then let's ask a question: "Show me all tables in my QuestDB database". It will then prompt you to allow the Postgres interaction.

Then it will return with an answer.

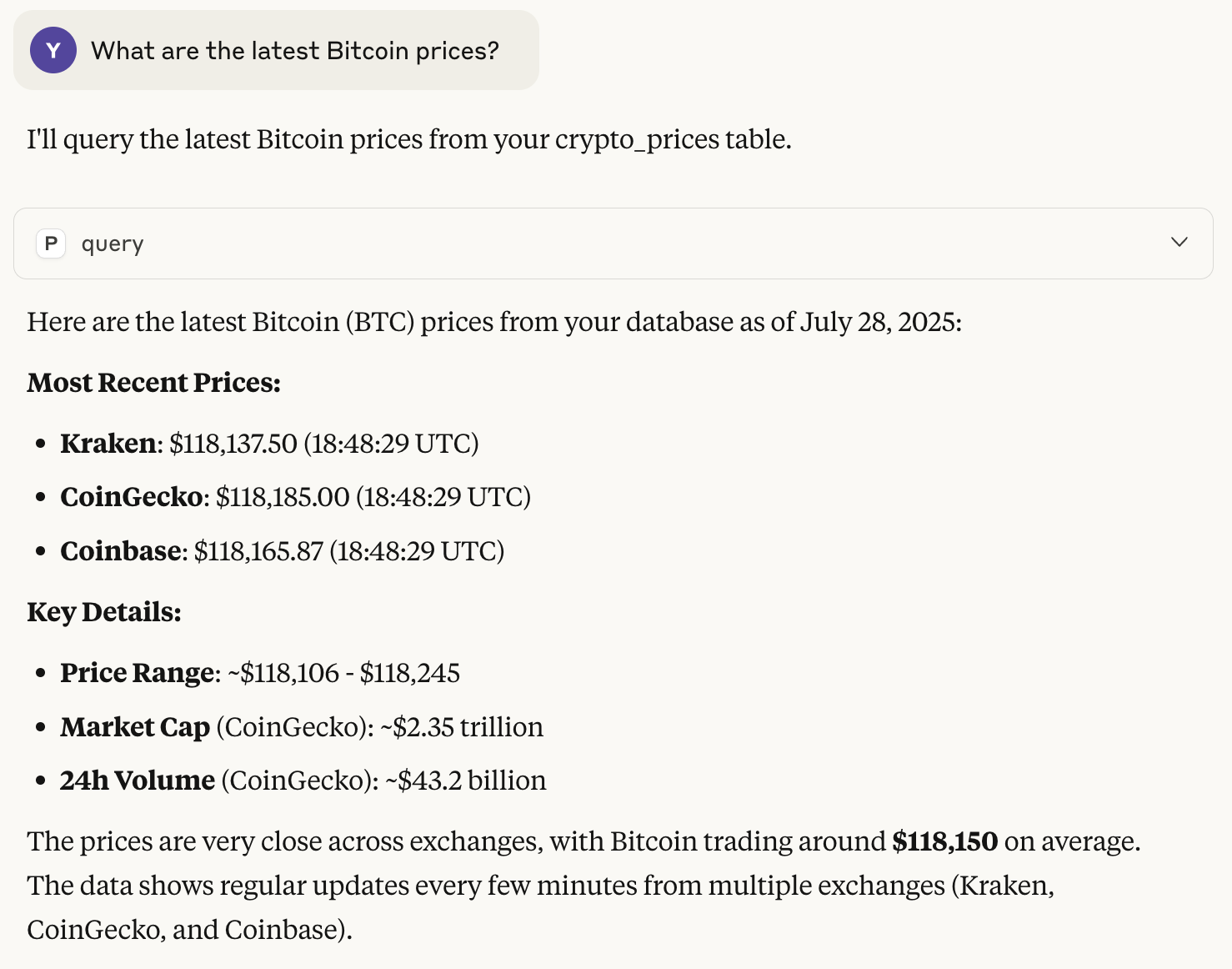

We can now ask the same questions from the REST API demo.

Note that the LLM knew to use our crypto_prices table instead of fetching live prices from

the Internet for this query.

Pros and Cons of PostgreSQL MCP

The two biggest advantages of using PostgreSQL MCP are that

- it can maintain a persistent connection context to build upon previous queries

- it has native schema awareness without having to provide that information ourselves.

With the REST API approach, we needed to know what our data looked like to build the query, but the MCP server exposes that information already to the LLM so we have less work to do on that front.

However, using MCP servers is not without limitations. First off, the technology is still relatively new and changing all the time, as seen by the "archival" status of the PostgreSQL MCP server by the Anthropic team. There are legitimate security and governance questions as we don't have secure MCP registries or gateway solutions yet. Finally, we do need additional infrastructure (i.e., MCP server and client) to run this out of the box. With our local demo, this was simple, but imagine having to productionize this with tight scrutiny from security and legal teams for a financial use case, for example.

Final Thoughts

In this article, we explored two different ways to interact with data on QuestDB. First, we looked at leveraging QuestDB's REST API to ask an LLM tool to generate code for us to both ingest and query that data. Then, we used a PostgreSQL MCP to query data directly from an AI agent and build upon that context.

At the time of writing, the MCP ecosystem is still evolving with lots of promise but not yet to the point of production-level support. There are security concerns like prompt poisoning or even supply chain risks that have yet to be solved widely. Still, MCP servers provide context awareness and other useful features that work well with QuestDB's PostgreSQL wire endpoint out of the box.

The good news is that since QuestDB supports both protocols, we can pick and choose what to use depending on the use case. And as AI tools continue to evolve, the ability to query data using natural language will be increasingly easier. Perhaps the interface of choice will be left to a semi-autonomous agent to decide depending on the performance and risk guidelines we provide in the future.