Automating Workflows in QuestDB: Bash scripts, Dagster, and Apache Airflow

Very often, our community wants to automate common tasks in QuestDB.

For example, maybe they want to detach some folders and move them to object storage, or maybe they want to run some complex queries on a schedule and export the results as CSV. Folks might even drop partitions when some conditions are met, beyond the capabilities of our native table TTL.

To accomplish this, we need a reliable way to automate execution.

In this post, we will explore three ways to automate workflows in QuestDB:

- Bash + Cron: A lightweight and simple way to schedule commands

- Dagster: A modern data orchestrator with strong developer tooling

- Apache Airflow: A mature workflow automation platform widely used in production environments

Each method has its own strengths and trade-offs, and we will highlight them with real examples. You can find all the code for these approaches in our GitHub repository.

Option 1: Bash + Cron

A simple way to automate tasks in QuestDB is to use a Bash script and a cron job. This method is quick to set up and works well for lightweight scheduling needs.

#!/bin/bash# ConfigurationROOT_DIRECTORY="/root/.questdb/db"TABLE_NAME="ecommerce_stats"S3_BUCKET="questdb-javier-demos"S3_KEY_PREFIX="bash-demo"# Calculate the date 21 days agoOLDER_THAN_DATE=$(date -v-21d '+%Y-%m-%d')# Convert and detach partitions older than 21 dayscurl -G "http://localhost:9000/exec" --data-urlencode "query=ALTER TABLE $TABLE_NAME CONVERT PARTITION TO PARQUET WHERE ts < '$OLDER_THAN_DATE'"curl -G "http://localhost:9000/exec" --data-urlencode "query=ALTER TABLE $TABLE_NAME DETACH PARTITION WHERE ts < '$OLDER_THAN_DATE'"# Find and process detached partitionsfind $ROOT_DIRECTORY -type d -name "*.detached" | while read detached_dir; do# Compress Parquet filesfind "$detached_dir" -type f -name "*.parquet" | while read parquet_file; dogzip "$parquet_file"done# Upload to S3aws s3 cp "$detached_dir" "s3://$S3_BUCKET/$S3_KEY_PREFIX/$(basename $detached_dir)/" --recursive# Remove local detached partition directoryrm -rf "$detached_dir"done

All told, the script does the following:

- Sets up

- Calculates the date 21 days ago

- Converts and detaches partitions older than 21 days

- Finds and processes detached partitions

- Compresses Parquet files

- Uploads to S3

- Removes the local detached partition directory

Running with Cron

To run this script daily at midnight, we would add the following line to our crontab:

0 0 * * * /path/to/drop_partitions_older_than_21_days.sh

Pros & Cons of Bash + Cron

✅ Simple to set up.

✅ No dependencies.

✅ Lightweight execution.

❌ No monitoring or error handling.

❌ No built-in retry mechanism.

❌ No backfilling support.

See our Automating QuestDB Tasks guide for more information..

Intro to Workflow Orchestrators

For more advanced automation, workflow orchestrators provide structured execution, error handling, logging, and monitoring. They also allow easy backfilling of missed jobs. Two widely used orchestrators are Dagster and Apache Airflow.

What Are Workflow Orchestrators?

Workflow orchestrators help automate and schedule tasks, with key features such as:

- User interface: Provides visualization of job execution

- Scalability: Supports parallel execution and distributed workloads

- Error control: Handles retries and alerts on failures

- Composability: Enables reuse of the same logic across multiple workflows

- Dynamic configuration: Centralized configuration for parameters and connections

- Backfilling: Re-executes past jobs in case of failures or new data arrivals

We'll cover Dagster and Airflow in the next sections.

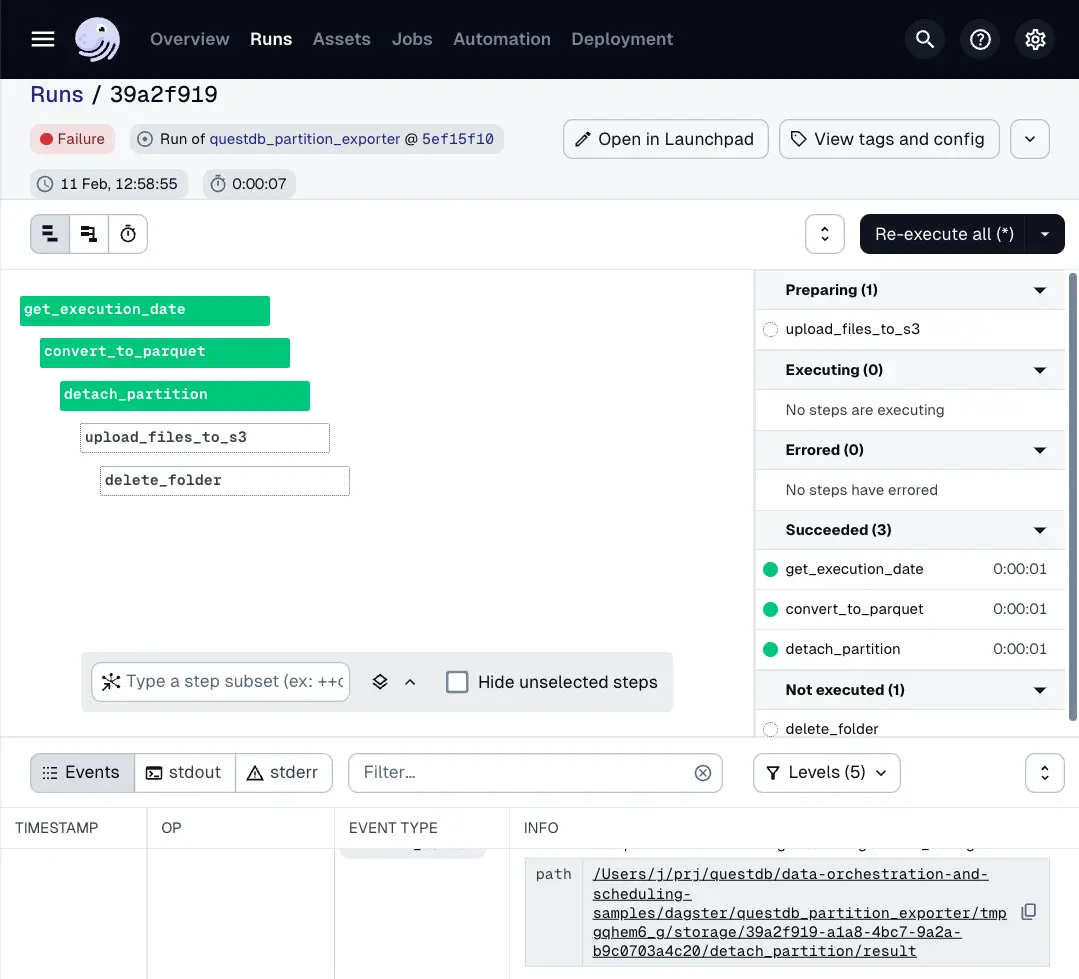

Option 2: Automating with Dagster

Dagster is a modern data orchestrator that focuses on development ergonomics and testability. It allows defining workflows as Python functions and provides strong observability.

Example Dagster Pipeline

Our Dagster implementation interacts with QuestDB using the psycopg PostgreSQL client and manages S3 uploads via boto3. The pipeline performs similar steps to our bash script:

- Converts a partition to Parquet

- Detaches the partition

- Uploads the Parquet files to S3

- Deletes the partition folder

Connecting to QuestDB

We define a postgres_resource to establish a connection using psycopg:

@resource(config_schema={"connection_string": str})def postgres_resource(context):conn = psycopg.connect(context.resource_config["connection_string"])try:yield connfinally:conn.close()

Running SQL Queries

We execute SQL commands directly on QuestDB using:

sql_query = f"""ALTER TABLE ecommerce_statsCONVERT PARTITION TO PARQUETWHERE ts = '{execution_date}'"""with context.resources.postgres.cursor() as cursor:cursor.execute(sql_query)

Running Dagster

-

Install dependencies:

pip install dagster dagster-webserver boto3 psycopg -

Start the UI:

dagster dev -

Launch a job manually from the Dagster UI

Pros & Cons of Dagster

✅ Python-native and easy to use

✅ Good for complex data workflows

✅ Built-in scheduling, monitoring, and UI

❌ Not as widely adopted as Airflow

See our Dagster guide for more information.

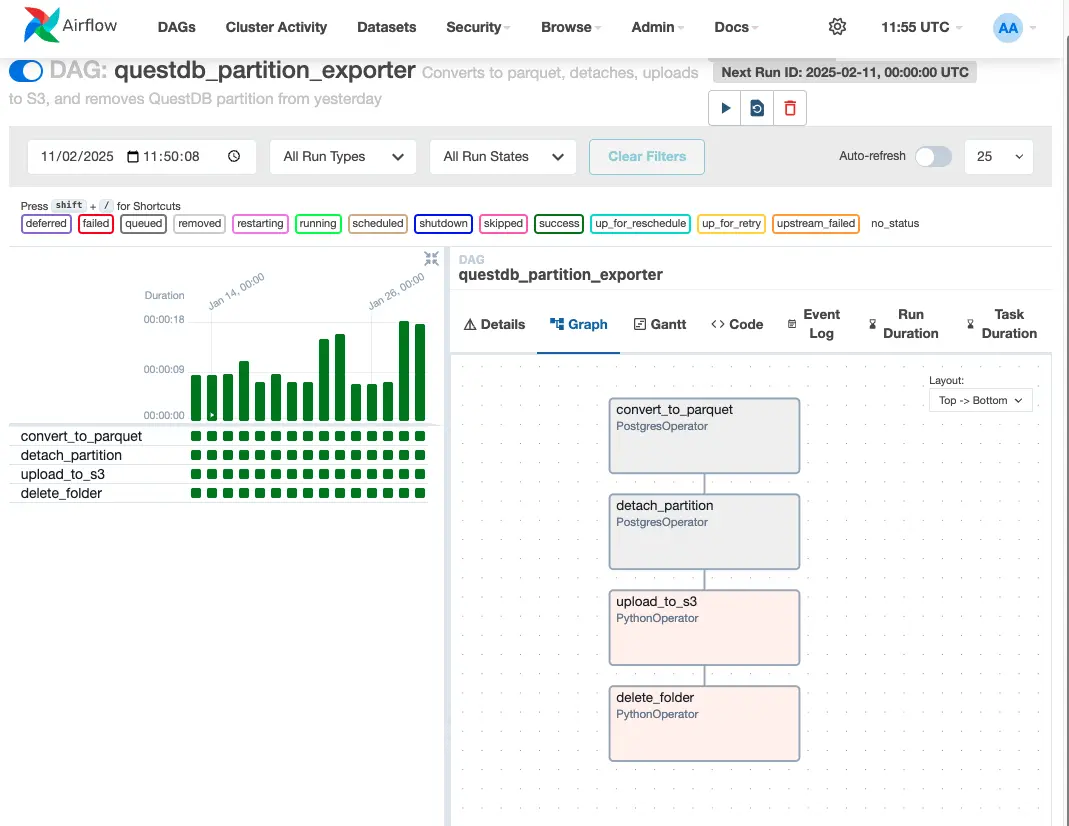

Option 3: Automating with Apache Airflow

Apache Airflow is a widely used workflow automation tool with a powerful UI and extensive integrations. It allows defining DAGs (Directed Acyclic Graphs) that model task dependencies.

Example Airflow DAG

Our Airflow DAG follows the same process as the Dagster pipeline but uses Airflow's built-in PostgreSQL operator to interact with QuestDB:

Connecting to QuestDB

Airflow uses the PostgresOperator to send queries:

convert_to_parquet = PostgresOperator(task_id='convert_to_parquet',postgres_conn_id='questdb',sql="""ALTER TABLE ecommerce_statsCONVERT PARTITION TO PARQUETWHERE ts = '{{ ds }}'""",dag=dag,)

Running Airflow

-

Install Airflow:

pip install apache-airflow -

Start the Airflow UI:

airflow standalone -

Open

http://localhost:8080and trigger the DAG.

Pros & Cons of Airflow

✅ Industry standard for data orchestration.

✅ Scalable with distributed execution.

✅ Extensive plugin support.

❌ More complex setup than Dagster or Bash

See our Airflow guide for more information.

Conclusion

Each approach has its trade-offs:

| Feature | Bash + Cron | Dagster | Airflow |

|---|---|---|---|

| Ease of setup | ✅ Simple | ⚠️ Moderate | ⚠️ Moderate |

| Monitoring | ❌ None | ✅ UI + Logs | ✅ UI + Logs |

| Scalability | ❌ Limited | ✅ Scalable | ✅ Scalable |

| Error handling | ❌ Manual | ✅ Retries | ✅ Retries |

| Backfilling | ❌ No | ✅ Yes | ✅ Yes |

In summary, we'd break it down like this:

- Use Bash + Cron for quick, lightweight automation

- Use Dagster for Pythonic workflows with structured dependencies

- Use Airflow for Python-based scheduling with broad integrations

For a deeper dive into the code, visit the GitHub repository.

We're always up for a rousing chat about workflow automation. Join our Community Forum or our public Slack to share your thoughts.